I have been many things.

They all lead to who I am now.

Xandra Granade (she/they), storyteller.

It's been almost two years since I've written an entry in this newsletter, despite it supposedly being weekly. In no small part, that owes to the fact that in 2023, I contracted COVID for the first time, and have since suffered immensely from long COVID symptoms. Learning to write, make art, and to put words out into the world with long COVID has also meant learning how my new body works, what its limits are, and how to safely work within those limits while continuing to expand my limits in other ways.

Part of that has also been my recent experimentation in mesoblogging, using shorter-form text posts to keep writing as a practice. That too has been difficult, though, due to my setting a goal of posting a new mesoblog entry each day. Negotiating that with long COVID limits, writing classes, developing out personal writing projects, and the day-to-day rhythm of life in anticipation of and now in the reality of fascism all conspire to make daily schedules harder.

In parallel to the above, less than two weeks into the new Trump administration it has become appallingly clear that survival is going to be a long, hard slog, at least for the foreseeable future. Survival is resistance, and that's critical in its own right, but survival is also the whole point of the thing: I have a right to exist, as do you. We resist fascism by surviving, and because fascism threatens our survival. Long, hard slog though it might be, the daily work of surviving is blessed, wonderful work. As the cliche goes, this is a marathon, not a sprint. For most of the first Trump administration, our family whiteboard had a single phrase written on the top: "survival is measured in days, not hours."

All of this has taught me that my previous structure of daily and weekly posts and paid subscriptions is not sustainable for me, my long COVID, and my other healthcare needs. Moreover, asking for a subscription is wildly hubristic when so many independent journalists and so many writers depend on their subscriptions for a living.

My fundamental goal with asking for subscriptions has not been to solicit donations, but to seek fair compensation for the labor I put into my words, and to in turn help enable financial independence from tech as an industry. My writing, however, is not limited to this newsletter; it is also expressed through micro- and mesoblogging, and I hope to soon complement that with shorter one-off self-published stories. Pursuing that broader goal by using the subscription model fundamentally limits, however, not only the form of my writing, but also the content. Every major payment processor that is feasible for me to use bans, for instance, sexual content under the same provisions as illegal materials. It is inescapable both that sexual content is, at its core, part of the human experience, and that there is a growing movement to label all queer existence as pornographic. Insofar as creation is an expression of humanity and resilience in the face of fascism, even outright porn is a part of creation and should not be walled-off categorically.

Finally, I want whatever words that I share with the world to be as broadly accessible as possible. In an advertising and surveillance–driven world, that largely means that paywalls and the like are infeasible. Putting everything together, the most reasonable approach I have found to pursuing my own financial independence while also creating under the constraints of long COVID is to publish everything that I am able to for free, with no paywalls, with a link set up to allow readers to pay what they want for my labor — compensation, not donation. While that model isn't feasible for longer-form writing such as novellas, nor for work subject to traditional publication, I believe that at least for now, it's the right model for me to create art in a way that values my own creative labor.

For now, I'll be using my Ko-fi page for my pay-what-you-want model; please feel free to drop by there if my writing has been helpful to you! Thank you so much for your support.

The world is ending, of that there can be no doubt. Everything is finite, and nothing finite can last forever. That ending may be entirely out of human reckoning, or we may fall to any of the existential crises that we face; climate change not the least of them. There's good reason to be optimistic, not to mention the argument that we have a moral responsibility to resist doomerism. Nonetheless, doom is always possible, and acknowledging that possibility is at best uncomfortable and at worst despairing.

When faced with existential dread and uncertainty, turning to fiction to help us understand, cope, and confront our own feelings is one of the most human things we can do. There is absolutely a moral dimension to how we do so, but fiction that admits the real possibility that the world might end — eschatological fiction — can help us not feel so alone with that existential dread.

Personally, this is part of what has long drawn me to the Final Fantasy series of games. Right there in the title, the series always asks the player to consider the very real possibility of the end of the world through fantastical analogy after fantastical analogy. Now in its sixteenth main iteration, the series has long explored eschatology through different metaphors and literary tropes. Final Fantasy X showed a world brought to the brink by unprocessed generational trauma, while Final Fantasy VII showed a world on the precipice of annihilation caused by the unchecked machinations of a single energy company. Sometimes, the series points inward; Final Fantasy XIII-2 asks us to come to terms with our own culpability in disaster, and to look directly at our own need to be acknowledged as a moral good in the world — to understand how that can obscure the costs of our actions. In its latest expansion, Final Fantasy XIV even directly takes on how eschatological stories such as those found across the series can fail to help us but can instead land us at overwhelming and all-consuming despair.

In its sixteenth and latest iteration, though, Final Fantasy has brought its focus further inward than ever before, directly placing its protagonist in opposition not only to the game's villain, but also in opposition to us as players. In this post, I'll explore my own thoughts on that radical shift in the series' focus, how I approach that shift as a fan, and why I think that change is so badly needed in the face of unrelenting technoutopianism that would bend our much-needed optimism into nowhere solutions.

This post is not, however, a review of the game. A review may simply conclude with "play it, it's a good game and well worth the cost" after extensive discussion of the game's technical merits, story, aesthetic qualities, and so forth. That is all good and necessary, but my goal here is rather opposite: to carefully consider what I got out of having played the game. I have made my decision to purchase and play the game, leaving me to now reckon with the effects of having done so.

Necessarily as a result, this post will be extremely spoiler-y. I will assume from here on out that you have either played Final Fantasy XVI in its entirety, or that you are not bothered by a pointed discussion of its plot. As this post deals extensively with the history of the series, I'll also issue a spoiler warning for anything with "final" and "fantasy" in its title.

Before I can explore what Final Fantasy XVI is, I must do due diligence in being clear about what the game is not. The game is fundamentally limited in some extremely important ways that do, sadly, not only undermine its own ideals but also cause actual harm in the real world. No exploration of the game's plot and impact is complete, or even truly started, without the recognition that FFXVI strongly revolves around a fantastical analogy for real-world slavery, yet somehow manages to omit all depiction of Black people, who even today bear the generational brunt of that cruelty, compounded with new and continuing forms of oppression.

The conspicuous whiteness of the game may be best summed up in the character of Elwin Rosfield, monarch of the Rosarian nation. We are told continuously throughout play that Elwin is a true ally to the Bearers, the slave caste within the game's story, but we are left to simply assume this to be the case. From very early on, we are shown that Rosaria depends economically on the labor of its Bearers, and still treats them as property, albeit with less casual cruelty than its neighbors. That mere window dressing around the abhorrence of slavery is all we are given as proof that Elwin has earned his stance as an ally until late in the game, where we learn that he had undertaken a generations-long project to dismantle Rosaria's slavery state despite political opposition by Rosaria's rich power brokers. Presumably, the Bearers are supposed to be thankful for this delayed and secret support, continuing to serve their owners for the decades that Elwin's project will take to free them.

In his capacity as the main protagonist, Elwin's son Clive Rosfield is at least significantly more active, taking charge of a network of Bearer liberation cells. Early in the story, Clive is taken captive by one of Rosaria's enemy states and made into a Bearer himself, brutally driven to serve his captors for thirteen years. FFXVI shows us how this motivates Clive to take the sword on behalf of his fellow Bearers, but doesn't bother asking us to reconcile with the complicated legacy left by his father, nor why it took thirteen years as a slave to be convinced of the cause.

These omissions are frankly racist, and in ways that undermine the game's key themes and impede its moral clarity. The game is absolutely weaker, perhaps even catastrophically so, for these problems, and should be criticized strongly on that basis. I am myself white, and thus not in the best position to give voice to these criticisms, but rather am obligated to listen to them carefully, without defensiveness, and to amplify them as best as possible. In that vein, I encourage you to read and watch the following treatments on racism in FFXVI:

With that in mind, and in full knowledge that the game is problematic, let me proceed to discuss what Final Fantasy XVI is and what it does accomplish with the sixty hours of attention it demands from its player.

Throughout the series, Final Fantasy has made extensive use of crystals as a visual and narrative motif. In Final Fantasy VII, crystallized essence of planetary life serves as the basis for all magic. In Final Fantasy XIII, people who achieve their divine tasks are turned to crystal until the gods need them next. In Final Fantasy XIV, the game begins with a crystal goddess exhorting us to "hear, feel, think." That we later learn that the selfsame goddess came into being by consuming half of the entire population of the ancient world, and that we later destroy what little is left of Her keeps us awake at night grappling with how much we depended on Her earlier benevolence, and with the complexity of what brought Her to commit the heinous acts that serve as the basis for the game's entire plot. In Final Fantasy IX, crystals are the source of all life, while in Final Fantasy Tactics, the dead return to crystal at the end.

Perhaps most notably, though, Final Fantasy Type-0 tells the story of a world divided into "crystal states," nations each founded around the magical powers granted by their respective crystals. Each country's crystal demands an extreme cost, consuming the memories of soldiers who depend on their powers, and locking the continent in eternal war. Fate in FFT0 is, at best, the crystals sustaining themselves through neverending conflict, a thin analogy for the real-world military–industrial complex. Peace is fundamentally incompatible with the existence of crystal states, as FFT0 shows a world entirely dependent on magical weapons powered by crystals.

It is in this tradition that Final Fantasy XVI builds its world of nations dependent on gigantic mountain-sized Mothercrystals not just for military dominance, but as energy sources for every aspect of daily life. An early scene shows court gardeners maintaining topiaries with Aero spells cast from shards mined out of Mothercrystals and imported into Rosaria. Blacksmiths' forges are powered by Fire spells, and crops are irrigated with Water spells.

All the while, lands around Rosaria are increasingly becoming barren, falling to an ever-expanding Blight in which no crops can grow, no animals can thrive, and life comes to a silent end. Many of the game's monster enemies are simply animals displaced from Blight-stricken lands, and much of the game's conflict comes from scarcity as refugees flee the Blight. A dozen or so hours into the game, we learn that the Blight is the toll exacted by the Mothercrystals, the void left behind as they drain magical energy from the lands. This revelation in turn is later displaced by the even more pointed truth that even absent the Mothercrystals, magic of any kind necessarily draws from the land and spreads the Blight; the Mothercrystals simply make it much easier to depend on magic, to trade magical energies as physical trinkets.

The analogy to real-world climate disasters is obvious, of course, cemented in quest names like "Inconvenient Truth." Where our capitalist societies depend seemingly inexorably on fossil fuels, the feudal states of FFXVI depend seemingly inexorably on crystalline blessings. Perhaps for the first time in the long-running series, the crystals are not only complex, but actively malevolent objects to be destroyed.

At this point, I could imagine stopping and writing a different essay about how the Mothercrystals serve as climate fiction, where they capture or fail to capture the complexities of our dependencies on fossil fuels, or how the game deeply interweaves its twin stories of liberation from oppression and independence from magic. That would be, I think, a valuable and necessary take on the impact of the game's narrative, but I want to focus on a different element instead: how Final Fantasy XVI directly implicates the player as being the origin of its dystopia.

Needless to say, the story expects the player to eventually succeed in destroying Ultima, creator both of all humankind and of the Mothercrystals that bring so much grief to the world. Following a battle so replete with Christian imagery that Ultima throws out attacks like "The Rapture" in between calling the player character "Logos," we're shown a scene presumably set some centuries later. In this last glimpse, we're shown two young boys struggling with everyday chores while wishing that they had the power of Eikons and magic to help, before cutting to the boys playing out scenes from the game as youthful make-believe. Before fading to black, the camera pans back to show the book of fairy tales that inspired the youthful play, immodestly titled Final Fantasy.

The strife of the game, the immense personal cost that Clive and his family paid to end their world's crystalline dependency, might ultimately fail to save the world. Even after succeeding in felling Ultima, humanity still yearns for the power and convenience of the Mothercrystals' blessing. In that closing, the game makes explicit the deconstruction that runs throughout its plot, denying us any hope of hiding in ignorance. In wanting this fantasy, even after being shown what it costs, we are the young children threatening to undo all that Clive worked for.

Looking back through that dismal lens, then, much of the plot becomes more clearly focused on the player and their actions. After all, the player continually compels Clive to use his magical abilities to defeat — to murder — score after score of enemies. Inhabiting his Eikon, Ifrit, is intensely traumatic for Clive, but we force him to do so merely by pressing both sticks in at once. The game takes every opportunity it can to show us the toll that Eikons have on their summoners' bodies, and yet it's a cheap and easy source of advantage in combat. Are we any better than the young boys at the end, wishing for an easy shortcut around difficulty in the form of magic?

It gets worse still when we consider Ultima's plan: to turn Clive into a perfect vessel for his power, erase his will, and conquer his body as an ultimate weapon. As the player, we advance Ultima's plan when we dive into menus to unlock new abilities and grow the power of Clive's Eikonic feats. We steer Clive towards Ultima's aims with our controllers, possessing him as completely as Final Fantasy VI's slave crowns. Clive may have escaped his fate as a Bearer, but we still control him as our own avatar.

In the end, then, the strict linearity of the game's plot isn't weakness, but is shown to be the characters resisting the player's influence. In the moment of his final victory over Ultima, Clive tells us that "the only fantasy here is yours, and we shall be its final witness." Cheesy and corny as the title drop is, Final Fantasy XVI uses it well (along with minor key–shifted versions of the series' iconic prelude) to make its deconstruction truly inescapable: victory necessarily requires destroying the Mothercrystals, resisting Ultima's dominance over the world, and ending the fantasy.

Here, I might well be accused of reading too much into the story, of injecting my own complicated feelings about video games and burdening the plot of Final Fantasy XVI with them. In defense, though, I will direct you back to the "Inconvenient Truth" side quest. Given how directly that title speaks to the game's climate fiction themes, one might reasonably expect the side quest to concern itself with how society came to depend on the Mothercrystals, or how society denies the reality of the Blight and its causes, but no: the quest concerns itself with how the Bearers first came to be enslaved. The true story of their oppression is considered heretical, as it would offend and upset modern political elites, a story beat that feels far too relevant as fascists like DeSantis work to rewrite American history to remove any mention of the US as an oppressor.

The quest is given to you by Vivian, a professor of history, and one of two characters responsible for providing you with a reference to the game's extensive lore. As you return the banned book to her, she delivers a monologue on how important stories are, how they shape the truth by shaping belief, and how there is no objective truth about history that can ever be determined except through belief. To Vivian, truth is the consequence of belief, and belief is the consequence of stories. As arbiter of the game's lore, and embodiment of many of its most pointed themes, she tells you that the game matters because its plot can shape your beliefs about the world. What to make, then, of the young boys who believe in the superiority of a world with magic, that drains the world to power their society?

Throughout, the game is in constant conversation with the tropes and trappings of the series, continually bringing them under the same dismal lens. Jill, Clive's childhood friend turned comrade-in-arms, notes that your actions are causing monsters to become stronger — a commentary on the level grind of the series. Merchants are able to offer you their goods because they also profiteer from war. Gysahl greens aren't just for chocobos, but are a part of the food cycle; chocobo stew is likewise a delicacy served at the local pub.

There is a constant feeling of examining and putting away one's toys, of making something new in their stead. In other Final Fantasy games, there is a tradition of a character named Cid granting you an airship, and with it, cheap and uninhibited travel across the world. In Final Fantasy XVI, however, Cid dies at Ultima's hands, and it is his daughter Mid who is tasked with making the realm's first airship. A brilliant inventor, Mid easily produces a toy model of the airship from Final Fantasy III, IV, and XIV. A cutscene shows Mid carefully considering her airship prototype before realizing that it could be used to drop bombs and to create more war orphans like herself. Instead of building the actual airship, for the first time in the series, she literally buries it instead. If magic is dangerous, so too is the Clarkian escalation of technology into magic.

Other side quests have you teaching characters how to grow food without magic, how to graft morbols into other plants to help them grow, how to power forges without the use of crystals, and teaching people how to defend themselves with their swords and democratic governments. It is telling, then, that the penultimate villain is a monarch who surrendered his will entirely to Ultima and who called himself the Last King. In Ultima's world, and in the player's world, monarchs give way to fantasies, gods, and dependence. In Clive's world, monarchs give way to communities, democracies, and independence.

Clive's legacy, hinted at in the final scene, is a world in which people can fend for themselves without relying on Ultima, but it is also a world in which fantasy can lead them back into dependence and reckless excess.

Deconstruction, in the sense of literary criticism, works best when it highlights the contradictions and tensions inherent in a body of work or in a genre. At a personal level, I relate most to deconstruction that comes from a place of intimacy, sitting within a genre and all its flaws, and expressing its creators' deep and unabating desire for their art to be better. Within video games, that loving and intimate deconstruction has been put forth by titles such as Metal Gear Solid 2, NieR:Automata, and more recently, Tales of Arise; TVTropes suggests far more examples as well. After thirty-five years of the series, though, Final Fantasy has plenty to deconstruct within itself, and FFXVI steps up to do just that.

By comparison with deconstruction of genres of novel, film, or TV shows, video game deconstructions tend to center more heavily on the idea of ludonarrative dissonance, the tension between plot and mechanics. In Spec Ops: The Line, that dissonance plays out as a metaphor for "just following orders" to commit war crimes. In Tales of Xillia, the player is given an immensely powerful ability, but every use of it increases the chance of getting the bad ending in which the player causes extensive and extreme harm to untold millions, such that the only option that's consistent from a moral perspective is to simply not use that ability at all. In the case of Final Fantasy XVI, the conflict between Clive and Ultima is also a conflict between Clive and the player, such that the plot centers heavily around the concept of free will, submission to the divine, and submission to state power.

There, Final Fantasy XVI finds familiar ground not in video game deconstruction, but in the 1995 anime series Neon Genesis Evangelion. Both are deep deconstructions of their respective genres and media, centering deeply on free will and submission with divinity as a metaphor for coercion and force. That FFXVI directly acknowledges Evangelion without making the plot depend on that reference deepens the deconstruction, as it serves to remind the player that the game is in conversation with a wider culture of stories as well as its own numbered predecessors. The reference, together with a later scene in which Ultima tries to break down Clive's will in ways that closely echo one of the darker endings to Evangelion, also acts as an in-text confirmation that the deconstruction is intentional. What Final Fantasy XVI has to say about ludonarrative dissonance and the act of embodying a character through playing a game, Evangelion had to say about the demands fans make of anime. In both cases, participation is problematized and put under critical examination.

Deconstructions land best when they offer the potential for reconstruction, when the destruction that they apply to their own genres and media is in service of the creation of something better. If the player in Tales of Xillia resists pressing the button that gives them immense power, then peace becomes possible. If everyone playing Metal Gear Solid V across the world can agree to never build nuclear weapons in-game, then peace in the real world becomes possible. If everyone playing Final Fantasy XVI can reject technoutopian fantasies in favor of true community, then we may yet find that climate conflict is not inevitable — true peace may be possible.

To the extent that it is not limited by its refusal to surpass its own limitations and harms, Final Fantasy XVI succeeds by showing us hope that comes from depending not on state power or magical fuels, but on each other. Clive wins by relying on his brother, his childhood friend, his network of like-minded activists, and even his own former enemies. The game dares us to believe, if only for a moment, that Ultima and the real-world submission that he stands in for cannot defeat true and loving community, no matter how seductive his fantasies are.

The Final Fantasy series has seen the player act as terrorists, theatre performers, military school students (twice!), religious pilgrims, revolutionaries, the cast of Star Wars: A New Hope, and more, all the while giving us hope even in its bleakest of plots. With Final Fantasy XVI, we are invited to put down the controller, and act in the real world; to put into practice the boldest of hopes, and to make final the seductive fantasies that we are held captive to.

In today's newsletter, I'll lay out my case for why progressives should, as a matter of political principle, do the work needed to embrace and work to improve the fediverse. By necessity, making that argument cannot involve endorsing the fediverse in its current state as institutionalizing racism and other forms of hate, but rather this argument implores progressives to get involved, to build communities on the fediverse, and to do both the social and technical work needed to make those communities inclusive of all races, genders, orientations, and physical and mental disabilities.

At the same time, I also want to clarify that this argument is not intended to preclude the potential for building networks that are more consistent with progressive ideals than the fediverse. No honest expression of progressive ideals should ever posit a terminal state for societal improvement; we are a flawed society, and the nature of progressivism is to continually improve society to redress those flaws. Rather, I would argue that the fediverse in its current form is a baseline for what a progressive view of the internet should be: a flawed and problematic thing, but one that can be improved at a structural level through political and community organizing.

My argument will also necessarily involve some degree of technical jargon. While this argument is not a technological argument, and is not intended to be read exclusively by technical experts, it is absolutely and unavoidably true that technology is increasingly the lever used by capital to enact societal change. Understanding that fulcrum is essential; to borrow and rather mangle a phrase from Nora Tindall, progressivism does not demand that you need to be able to repair your car, but it does require you to understand that it burns fossil fuels. Similarly, some level of jargon is necessary as a matter of political rather than technical literacy.

I also need to stress that this is not a how-to guide in any form. It's a polemic, a political argument, and hopefully a well-reasoned rant, but it isn't a tutorial. For that, I highly recommend Kat's Mastodon Quickstart for Twitter Users by zkat.

Finally, I want to deeply thank Sarah Kaiser and Nora Tindall for feedback on drafts of this post.

With those clarifications in place, it's worth starting by expressing what we demand out of any progressive approach to social media. I posit that as progressives, we should demand no less than we do in any other political arena: durable improvements to society that work at a structural level to preclude injustice in the future. Whereas liberalism tends towards fixing problems in a symptomatic way, perhaps by the allocation of aid packages, tax credits, and other targeted action, progressive politics generally demands that immediate fixes are paired with structural changes that will help prevent the same or similar problems from occurring in the future. To approximate nearly to the point of mutilation, liberals are concerned with winning elections, while progressives are concerned with expanding voting rights. Liberals want to fire bad cops, progressives want to reduce police budgets and keep military-grade weaponry out of law enforcement hands. Liberals want to offer free bus fare to low-income riders, progressives want car subsidies to be applied to public transit instead.

Liberals and progressives tend to agree that it is bad to post to Truth Social, Parler, Gab, or other right-wing Twitter clones, but it's what comes next that separates the two philosophies. Liberals want to replace Twitter with a corporate social media platform run by good billionaires, progressives should want to build something better.

To build something better than the next Twitter requires understanding what went wrong with Twitter at a deeper level than what generally fits in, well, a single tweet. It's tempting to say that the problem with Twitter was that it was bought out by the richest man on the planet, and that said man is a virulent transphobe, a COVID-19 denier, a fierce opponent of labor rights, and a right-wing operative who helped launch DeSantis' campaign. All of these are true, and liberal political philosophy would be right to focus on these as problems that require a response. It is true that Elon Musk has turned Twitter into a fairly explicit right-wing disinformation network, but progressive political philosophy both invites us to and demands that we understand that to be symptomatic of deeper structural problems.

In particular, and I cannot emphasize this enough, the problem is that a single person can simply buy a significant fraction of all human communication. Progressive politics does not generally oppose corporate interests as a matter of aesthetics or us-versus-them sort of thinking, but as a recognition that centralized control of social organizations by unaccountable bodies is in general somewhere between bad and catastrophic. Privatization of public utilities is bad because it allows centralized control over the pricing and availability of basic infrastructure. Monopolies are bad because centralized control over markets creates wildly unjust economic inequality. Centralized social media is bad because it allows a small number of unaccountable owners to dictate how people communicate and advocate for their values.

That Twitter was bought out by a particularly egregious asshole makes the problem obvious and urgent, but it neither started with nor ends with him.

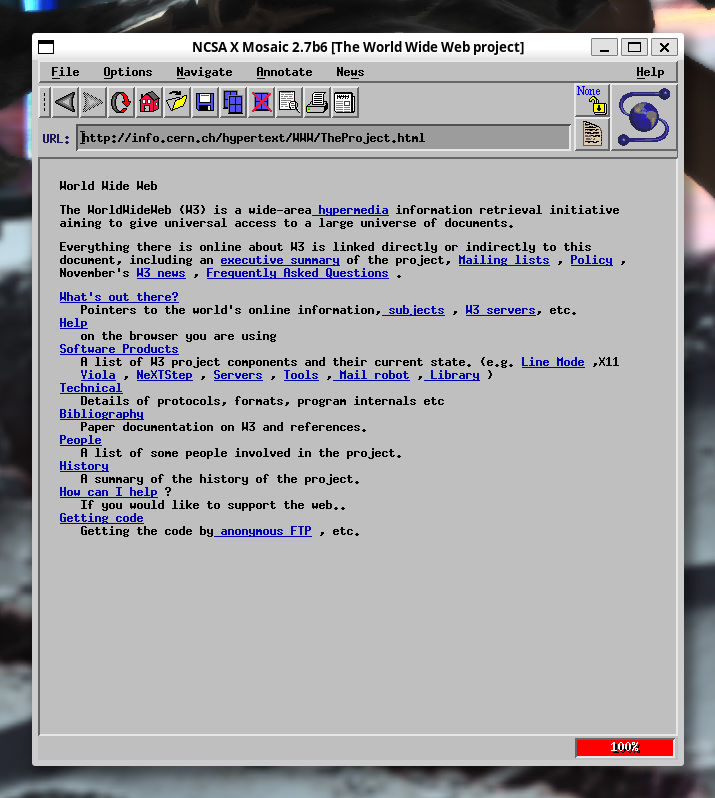

To a progressive, structure matters, so to understand what went wrong with Twitter, let's rewind back to when the Internet was structured very differently. (Here is where the technical jargon comes in, by the way. I promise that it's as minimal as I can make it while still staying true to my own progressive politics.) To browse the Web in 1993, you might do something like launch NCSA Mosaic, an early Web browser. You might then type something into the address bar (labeled "URL" in Mosaic) like http://info.cern.ch/hypertext/WWW/TheProject.html, and press Enter.

With the magic of the early-90s Internet, you'd have then gotten a window very much like the one above. To do that, your computer would have used the address you provided to make a request to another computer somewhere else on the Internet. A computer that is configured to reply to that kind of request is called a server; back then, many servers were the same kind of computer you might have on your own desktop, with nothing especially fancy about them.

Each address tells your browser three distinct things: how to ask the other computer for your page, what computer to ask, and what page to ask them for.

The first part, how to ask them, is sometimes known as the protocol, and is key to what makes the Internet what it is. Your computer and the server may be made by different companies, may run different software, may have been made at different times, and so forth, but they can still talk to each other because both sides have agreed to speak the same protocol. Today, almost everything goes through either the HTTP or HTTPS protocols, so this part of the address is slightly redundant, but it's nonetheless critical to allowing your browser to talk to whatever other website it wants even if that server doesn't know anything about your computer.

Next, your browser back then could talk to any server that was available on the Internet. Universities, municipal governments, Internet service providers, and other organizations may each have made their pages available on servers that they owned and controlled, so you needed a way to tell your browser what computer to talk to. The details are complicated and kind of beside the point, but computers that want to serve things on the Internet are generally given names; in this example, info.cern.ch. Your browser can use that name to look up what server to talk to, again not needing to know any other details about that server.

Finally, the last part of that address specifies what you want that server to give you. This part is generally up to the server, and acts as the name of a specific page on that server.
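If it helps to see those three parts pulled apart, here is a minimal sketch using Python's standard library on the example address from above. The variable names are my own labels for the three roles described here, not official terminology.

```python
from urllib.parse import urlsplit

# The example address from the text above.
address = "http://info.cern.ch/hypertext/WWW/TheProject.html"

parts = urlsplit(address)

protocol = parts.scheme   # how to ask: "http"
server = parts.hostname   # what computer to ask: "info.cern.ch"
page = parts.path         # what page to ask for: "/hypertext/WWW/TheProject.html"

print(protocol, server, page)
```

Nothing in that breakdown depends on who made your browser or who runs the server, which is the point of the next paragraph.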

Each part of that address exists because, above all else, the early Internet was interoperable. Your computer and whatever other servers you wanted to talk to didn't have to share anything beyond a basic agreement about what protocol to use and how to look up names. Both of those were owned by standards bodies, such that anyone could make a new browser or new server software without having to ask anyone for permission, and without having to update everything else on the Internet.

There were some bitter, bitter fights to make the Internet less interoperable, but that speaks to the immense importance of interoperability as a progressive value: at a technical level, it prevents any single piece of software from precluding any other software from working. At a social level, that guarantees that a single company cannot control what is and isn't allowed on the Internet.

Somewhere along the way, though, things changed. Servers had to get bigger and bigger to handle all the new computers on the Internet making requests, pages got more and more complex instead of just having text and some occasional images, companies offering stuff on the Internet got bigger and bigger, and most browsers came to be effectively made by Google. Now, if you look at your address bar in Chrome, Safari, Firefox, Edge, or whatever else, you might see something like https://twitter.com/cgranade/status/1643684152280231936. That address breaks down in exactly the same way as before, telling us that our browser used the HTTPS protocol to ask Twitter for a specific tweet that I wrote.

The trouble is, every tweet will have an address that's not too much different than that. Back in the 90s, if you wanted to learn about particle colliders, you'd go to cern.ch, and if you wanted to learn about your local city government, you might go to something like seattle.gov.

Increasingly, though, you might go to https://twitter.com/CERN or https://twitter.com/CityOfSeattle. In both cases, your computer has to ask twitter.com and only twitter.com for what is at that address. Whoever owns twitter.com can decide on everything your computer knows and sees about Twitter as a social media service. Musk buying the company that owned that one, single server (made up of untold numbers of actual servers all owned or rented by Twitter and working together to pretend to be a single server when talking to your computer) was enough to take control of all communication that happened on Twitter. Here, we come again to the progressive idea that it's not enough to respond to Musk by trusting all our communications to a different single server that can be bought out by a single other billionaire, but that we must instead go even deeper to understand how we got to this point in the first place, and what we can do better at a structural level going forward.

Much of the centralization of the Internet started happening with a set of technologies that collectively became known as "Web 2.0," allowing pages to act more like software in their own right, such that twitter.com isn't only the name of a server giving you different pages, but also an entire software application for interacting with those pages. Unlike on the early-90s Web, your browser no longer just gets pages from different servers; it gets software that often can only interact with specific servers.

The situation is even worse on phones and other kinds of embedded devices like game consoles, smart TVs, and so forth. If something has a Twitter app, that is software that can only talk to twitter.com; even your browser can still at least ask for software from other servers like facebook.com, but a Twitter app will never let you look at Facebook.

It didn't have to be that way, of course. We could have had a wonderful Web 1.9: all the nice new technology to expand what the Web can be without narrowing it down to a scant few servers controlled by an even smaller cluster of companies. Interoperability isn't enough to break down control over social media on the Web, though. After all, twitter.com is still delivered to your computer using protocols like HTTPS. While interoperability may allow for new software and new sites to participate in the Internet, it doesn't on its own guarantee that new users can participate without their words and images being owned, controlled, and moderated by a single unaccountable party. For that, to really realize a progressive vision for what the Web could be, we need to go beyond interoperability to federation.

Interoperability has always been important to the Web, but as progressives we need to look at how interoperability didn't protect against centralized control over how users interact with the Web. Interoperability on its own was far more about preventing any one software vendor from building a monopoly, but it doesn't at all preclude one social media platform from controlling communication and bending it to serve explicit right-wing causes.

I posit that the problem is that while interoperability focuses on a dichotomy between users as consumers and servers as publishers, that's not how most people use social media. Rather, people use social media to be, well, social; to talk to other users. On social media, you don't just read what other people have written, you build up lists of whose writing you'd like to read and even post your own. Interoperability between various kinds of software on its own doesn't guarantee that if you post to one server, another user can read it from a different server.

To achieve that, different servers need to talk to each other using an interoperable protocol; we generally call this kind of interoperability federation. If that all sounds a bit arcane, this is precisely how e-mail works. If you log into GMail and write an e-mail to my consulting company at cgranade@dual-space.solutions, that address isn't just how you find a server, but rather refers to a particular user on that server. The server at gmail.com uses that information to find dual-space.solutions and deliver your message to the user cgranade, allowing us to communicate with each other even though I don't use GMail.

From a progressive standpoint, that last part is critical: your use of GMail, Outlook.com, or whatever other e-mail service doesn't apply any pressure for me to use the same service provider. In particular, I don't use GMail for privacy reasons, and so the fact that e-mail is a federated protocol means that my boundaries around privacy and security are better respected than if I needed to use the same e-mail server in order to communicate with you.

That's not merely theoretical, either. If you want to text someone, there's a dance that's almost rote by this point, working out whether to use Twitter DMs, Discord messages, Facebook Messenger, Signal, or whatever else. Each of those services requires that you communicate only with other users on the same service. While you can send me an e-mail from GMail, you cannot send me a text from Facebook Messenger. (Matrix, however, is federated in a similar way to e-mail, but that's somewhat beyond the scope of this post.)

Thinking to a progressive response to the right-wing takeover of Twitter, then, a social media platform that is robust to future right-wing takeovers is one that is both interoperable and federated. Thankfully, such a platform exists, and has existed since about 2017: the fediverse.

Perhaps a silly name (though arguably no sillier than the early-00s "blogosphere"), but the term refers to the loose network of social media servers that communicate with each other using a protocol known as ActivityPub — the choice of server-to-server protocol is irrelevant if you're posting to the fediverse or reading someone else's posts, just as it's irrelevant to e-mail that servers communicate with each other using a protocol known as SMTP. Rather, what matters is that if you're using mathstodon.xyz to read my social media posts, you can ask mathstodon.xyz to follow @xgranade@wandering.shop, causing the server at mathstodon.xyz to communicate with the server at wandering.shop. Just as with e-mail, we don't need to use the same server to communicate and share posts with each other.
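For the curious, here is a rough sketch of how a handle like that breaks down, in the same spirit as the address example earlier. It assumes a WebFinger-style lookup (a standard way of asking a server about one of its accounts, which I understand Mastodon and similar software to use); this post doesn't otherwise cover that step, and the function names are purely illustrative.

```python
from urllib.parse import urlencode


def split_handle(handle: str) -> tuple[str, str]:
    """Split a handle like '@user@example.social' into (user, server)."""
    user, _, server = handle.lstrip("@").partition("@")
    if not user or not server:
        raise ValueError(f"not a full fediverse handle: {handle!r}")
    return user, server


def webfinger_url(handle: str) -> str:
    """Build the address one server could ask to learn about an account.

    Assumption: the remote server answers WebFinger-style queries.
    No network request is made here; this only constructs the address.
    """
    user, server = split_handle(handle)
    query = urlencode({"resource": f"acct:{user}@{server}"})
    return f"https://{server}/.well-known/webfinger?{query}"


print(split_handle("@xgranade@wandering.shop"))
print(webfinger_url("@xgranade@wandering.shop"))
```

Just as with an e-mail address, the second half of the handle names the server and the first half names a user on it, so any server can work out where to ask.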

Servers that participate in the fediverse this way (sometimes called instances) can be powered by any number of different software packages. An instance can use Mastodon to offer a Twitter-style social media feed, but users of that instance can follow users not just on other instances running Mastodon, but also Pixelfed, Calckey, GoToSocial, Lemmy, KBin, Bookwyrm and other kinds of instances.

Critically, though, that federation is not unlimited or without restrictions. In order for federation to respect consent, servers must also be able to refuse to federate with other servers on a case-by-case basis. If a server is only used for sending spam, I can make sure that my own server blocks it. In the social media context, an instance that hosts Nazi content should not be allowed to federate with other instances. Blocking another instance this way is called defederation, and is as much an expression of community boundaries and a proactive consent culture as it is when you block accounts from your own account.
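As a toy illustration of that idea (not how Mastodon or any other real software implements it), defederation can be thought of as each instance keeping its own list of servers it refuses to talk to. The class, its methods, and the `.example` domains below are all made up for the sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """A toy model of one fediverse server; not any real software's API."""
    domain: str
    blocked_domains: set[str] = field(default_factory=set)

    def defederate(self, domain: str) -> None:
        """Refuse all future federation with the given server."""
        self.blocked_domains.add(domain.lower())

    def accepts_from(self, handle: str) -> bool:
        """Check whether posts from '@user@server' would be accepted."""
        server = handle.rpartition("@")[2].lower()
        return server not in self.blocked_domains


home = Instance("home.example")   # hypothetical instance
home.defederate("spam.example")   # hypothetical spam-only server

print(home.accepts_from("@friend@mathstodon.xyz"))  # True
print(home.accepts_from("@bot@spam.example"))       # False
```

The decision lives with each instance and its community, rather than with a single owner deciding for everyone.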

If this all sounds complicated, let me once again appeal to progressive values, but in a more crass way this time: it fucking should be. This, by the way, is where we get to the fossil fuel part of the analogy that I borrowed at the start of the piece. The modern Web did not become simple by eliminating technical complexity, but by consolidating ownership. While a Mastodon instance like wandering.shop or mathstodon.xyz can participate in the fediverse with any other instance that talks using ActivityPub, Mastodon-powered or not, twitter.com runs Twitter, is owned by Twitter, can only be used in the Twitter app or from twitter.com, and talks only to Twitter. That's not simplicity, it's a monopoly.

Just as the dominance of car culture over public transit has severe implications for climate change, that monopoly has drastic and horrifying consequences for progressive causes. The Internet and the Web are extremely powerful tools, to put it mildly. They have enabled entire generations of queer people to find each other, but they have also led directly to literal genocide. They allow for progressive organization and advocacy at unprecedented scales, but also have enabled the incredible escalation in fascism embodied in Trump's and DeSantis' campaigns and policies. We don't get the good uses of the Internet without challenging the monopolistic structures that underpin so much of the modern Web.

Progressivism is about action, though, and thus demands that we do the work to understand that complexity — the same complexity hidden by coercive monopolies — so that our tools don't once again become tools of right-wing reactionaries.

That's necessary but not sufficient, of course. The structure of the fediverse is a structure that helps resist right-wing takeover and control of communication, but it on its own isn't sufficient to realize other progressive values, especially antiracism (to wit). Thus, progressivism has a few more demands to make of us. We need to advocate for better moderation policies on instances that we use and interact with. We need to raise awareness of where the fediverse isn't living up to what we need it to be. We need to sponsor efforts to make the fediverse better, more inclusive, and less hateful. We need to listen when people tell us we're not meeting that goal.

Perhaps, most of all, I strongly believe progressivism demands that we do not shut the fuck up. We need to be loud about why the fediverse is necessary, why making it better is necessary, what goes wrong with centralized social media, and most of all, what we can do together to truly make a progressive ideal of social media work.

In keeping with my love of hot takes, let me skip straight to the end: if you're writing a popular news article about quantum computing, the best way to explain what a qubit is is to just... not do that.

As fun as dropping a Granade-signature hot take and running away is, let me unpack that a bit, though. Open up a random news article about quantum computing, and it will likely open up with some form of brief explanation about what quantum computing is. Normally that will start with a sentence or two about what qubits are, something along the lines of "qubits can be in zero and one at the same time." Besides being so radically untrue that I included it in my quantum falsehoods post from a few years ago as QF1002, it just isn't very helpful to someone reading a news article about some new advancement. It's a bit like insisting that any popular article about classical computers should start out with a description of TTL voltages. All fascinating stuff, but a two-sentence summary at the start of a story just isn't the right place to delve into it.

In particular, it doesn't give the reader what they need to know to put quantum computing news into context: what impact are they likely to have on society, what challenges might preclude that, who is building them, and how do their interests relate to the reader's own interests? That these are generally regarded as more specialized details means you get a never-ending parade of folks who think quantum computers can instantly solve hard problems (they can't; that's QF1001), that quantum computers are faster classical computers (they aren't), or that quantum computers will be commercially viable in the next few years (they won't).

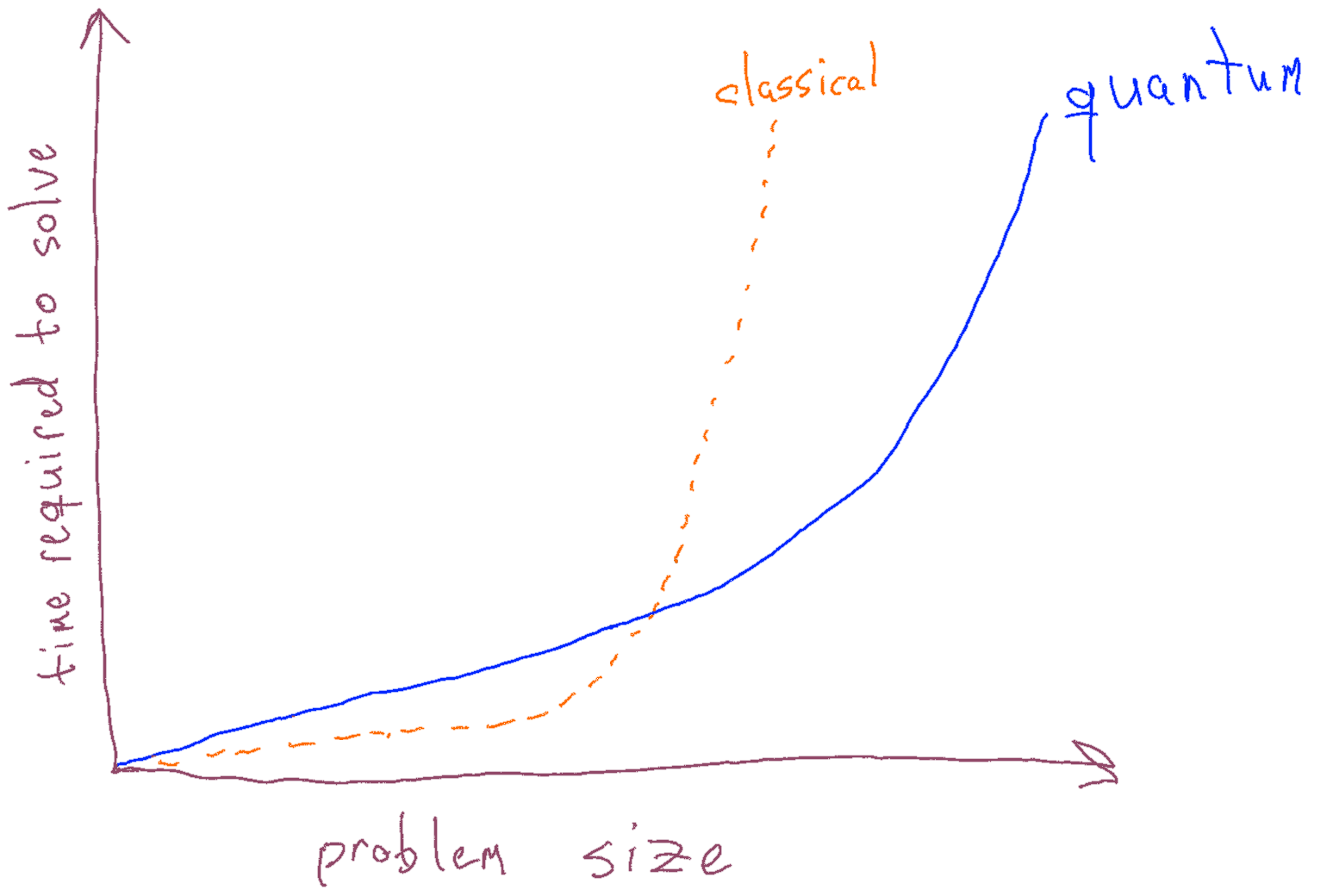

I'll offer that a much better way is to open with some version of this graph:

I sketched this (pretty badly, too, sorry; I'm much better at doing quantum stuff than I am at drawing) to indicate that it's really quite schematic. The particulars are very important, but depend on the kind of problem you're interested in — the shape of those curves depends heavily on the problem you want to talk about. For any problem that allows for a quantum advantage, though, you'll have a plot of that form, showing that for small enough problems, classical computers will still be faster, but as problems grow, quantum approaches look more and more appealing. As classical computers get better, that crossover point moves out, but critically, the overall shape is a property of the problem and the algorithm used to solve it, not of the specific devices used.
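To make the crossover idea concrete, here is an equally schematic sketch in code. Every number in it is invented: it assumes a classical cost that grows linearly with problem size, a quantum cost that grows like the square root of problem size (the kind of quadratic advantage some search-style problems allow), and a large per-step overhead on the quantum side. None of that describes a real device or a real benchmark.

```python
import math


def classical_cost(n: int, speed: float = 1.0) -> float:
    """Illustrative classical running time: grows linearly with problem size."""
    return n / speed


def quantum_cost(n: int, overhead: float = 500.0) -> float:
    """Illustrative quantum running time: costly per step, grows like sqrt(n)."""
    return overhead * math.sqrt(n)


def crossover(speed: float = 1.0, overhead: float = 500.0) -> int:
    """Smallest problem size (searching powers of ten) where quantum is cheaper."""
    n = 1
    while quantum_cost(n, overhead) >= classical_cost(n, speed):
        n *= 10
    return n


print(crossover())            # 1000000
print(crossover(speed=10.0))  # 100000000
```

Making the made-up classical computer ten times faster pushes the crossover out to a larger problem size, but there is still a crossover, because the shapes of the two curves come from the problem and the algorithm rather than from the hardware.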

Right away, this kind of plot already tells the reader a few very important things:

It's not that it doesn't matter what a qubit is — it absolutely does — rather, it's not the right context readers need to make sense of the endless stream of excitement, hype, progress, and misinfo thrown into the blender of modern news feeds. It's much more important and useful to provide that context directly than to perpetuate the same falsehoods.